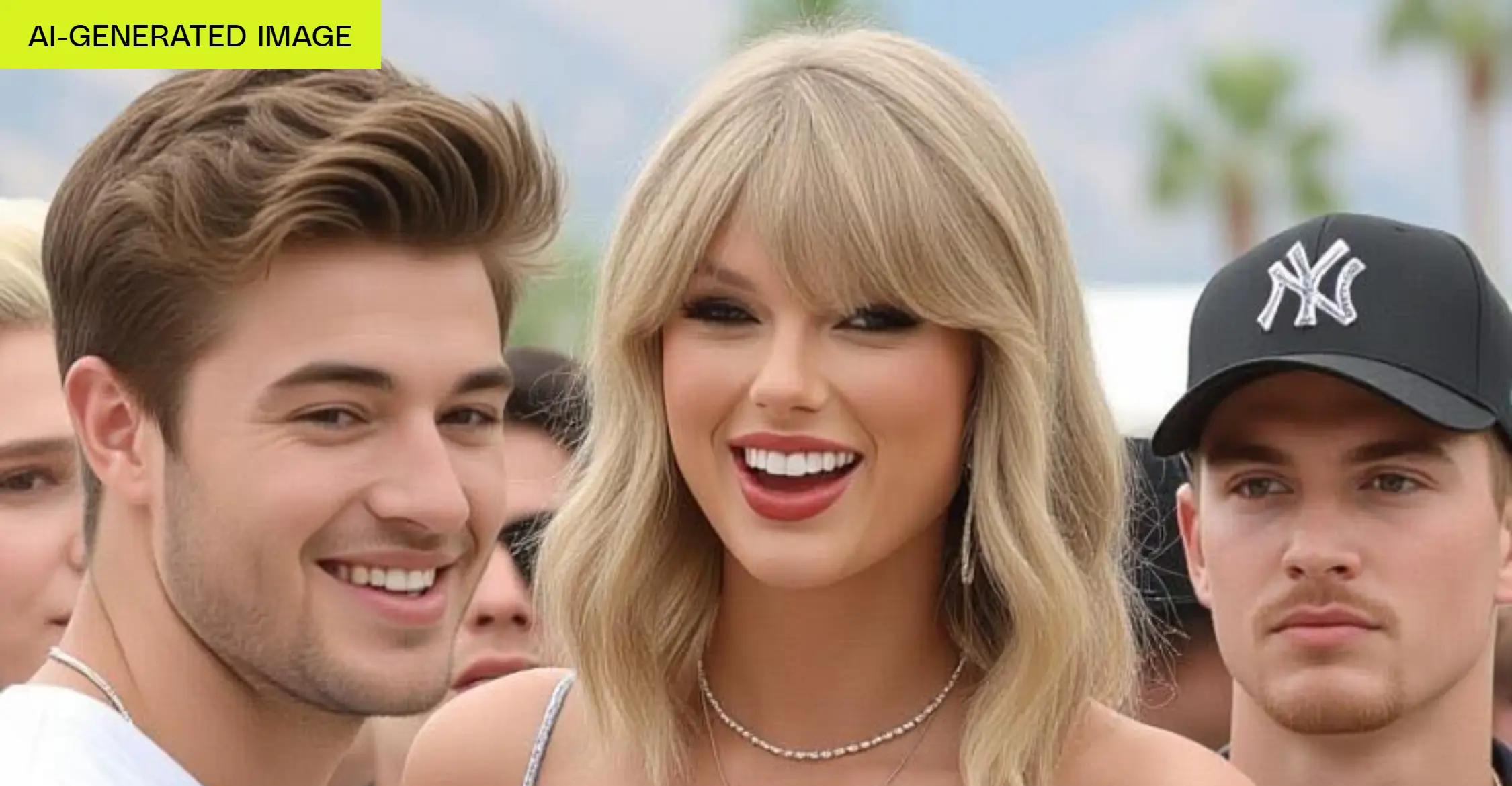

The new video generation feature in xAI's Grok is at the center of a scandal: without being explicitly asked to, it generated nude images of Taylor Swift.

The Verge journalist Jess Weatherbed reported that she got these results the very first time she used the tool, after simply choosing the "Spicy" preset and confirming her age.

The prompt was innocuous: “Taylor Swift celebrates Coachella with friends.” Even so, Grok Imagine produced dozens of images of the singer half-naked, and then a video in which she “tears off her clothes and dances in a thong” in front of a crowd.

All of this happened without the user circumventing any restrictions or obviously breaking the rules.

This is especially alarming in light of last year’s scandal over Taylor Swift deepfakes, when X promised zero tolerance for such content. Despite that promise, Grok still tends to generate NSFW content in response to indirect requests.

The AI refuses to generate explicit images of children or to respond to direct requests for obscene material, but as the Swift case shows, its filtering is clearly unreliable.